Projects

View all projects of the Hogeschool van Amsterdam (Amsterdam University of Applied Sciences). Looking for a specific project? Use the search function at the top of the page.

M-DPP - Digital passports for traceable clothing

-The M-DPP project is developing a digital product passport for textiles, based on molecular data, to support fair, traceable and circular fashion.

RECOVER

-RECOVER develops tailored guidance for people with cancer before and after surgery. The project improves recovery, self-reliance and collaboration in healthcare.

Building bridges around the primary school - How education and welfare work together to support parents and children

-This project examines cases of partnerships between primary schools and welfare partners that aim to identify poverty.

The LESSEN Project

-The LESSEN project makes AI more accessible with chatbots that need less data, handle information securely and clearly explain their choices.

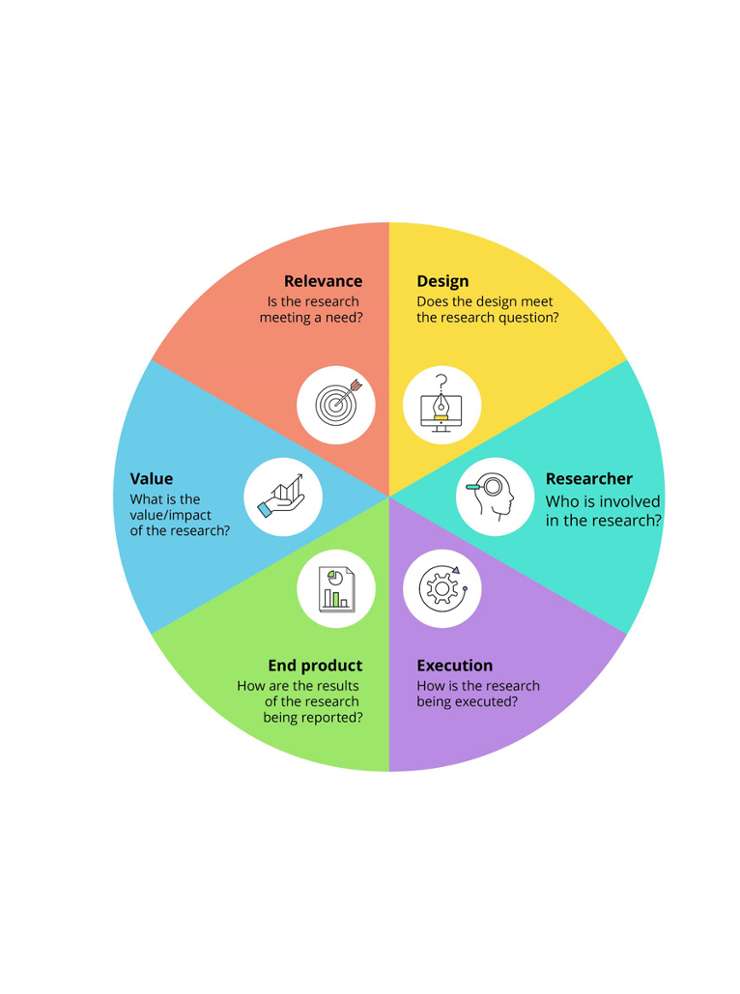

Creating the Desire for Change in Higher Education - Tools

-Instruments and workshops for integrating research into teaching.

UrbanSWARM: nature-based solutions for circular and future-proof cities

-UrbanSWARM transforms unused urban space with high-impact nature-based solutions to tackle climate change, water scarcity and inequality.

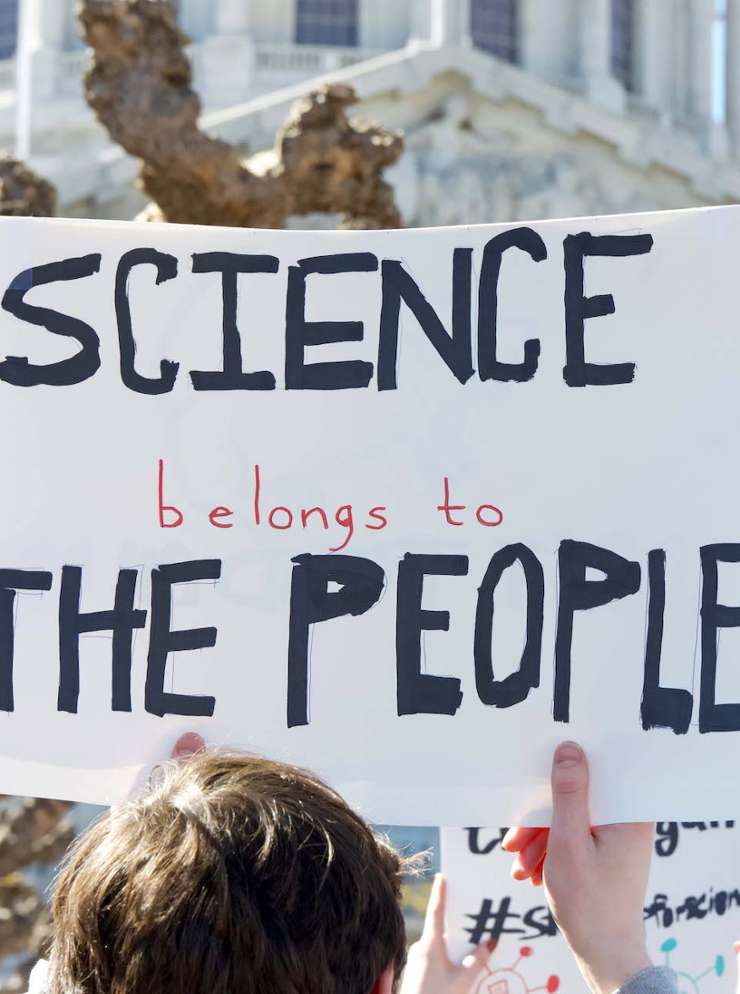

eXtreme Citizen Science Hub Amsterdam

-Citizen science is research with and by citizens. This project sets up a hub in Amsterdam that connects citizen science initiatives and strengthens collaboration.

Better in-store product advice thanks to a social robot with AI

-A project on using social robots with AI to improve product advice in SME shops, with insights for customer experience, service and retail innovation.

Moralis Machina: Talking about generative AI with a card game

-A playful and safe way to explore the impact of AI on government work.

Digital Tools for the Community Economy

-Digital tools for the community economy: research into energy data commons, sustainable governance and the scaling up of urban commons.

ShoppingTomorrow 2025: Healthier food choices with in-store technology

-In-store technology helps customers make healthier choices. This bluepaper shows how supermarkets can responsibly start, measure and scale up, step by step.

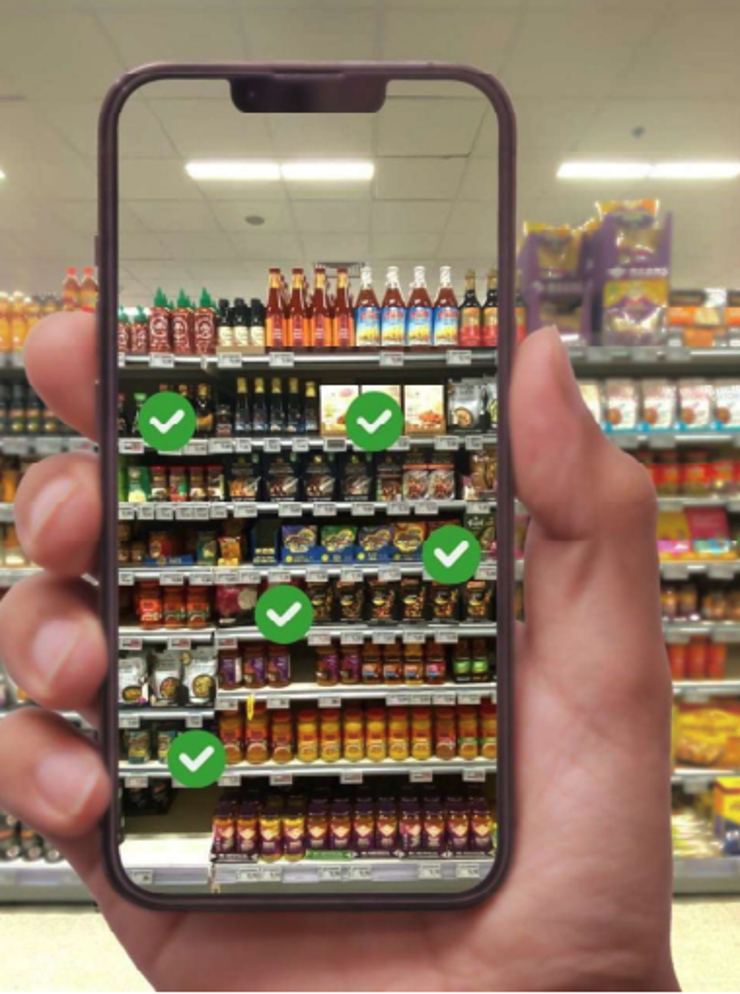

Healthier food choices in the supermarket with Augmented Reality

-Healthy choices in the supermarket with WebAR: research into how smartphone technology helps customers choose healthier products faster and more easily in SME supermarkets.

You and AI: Designing AI-Equipped Jobs in Finance

-You and AI investigates how organisations let people and AI shape work together, with practical tools for fair, future-proof jobs.

Symfonie Foetsie - social safety in the workplace

-Discover how the game prototype Symfonie Foetsie helps teams recognise red flags and improve social safety in the workplace.

Developing and monitoring citizenship education in po, go and vo (primary, special and secondary education)

-Researchers develop and monitor citizenship education in primary, secondary and special education.

Skilled technical workers for the energy infrastructure sector

-The energy transition needs skilled workers. This project examines how organisations can attract, retain and develop people to accelerate the transition.